People continue to perpetuate the myth that containers are somehow a form of "security isolation" or some sort of sandbox, even though this has been proven wrong again and again and again. You don't have to look far to run into projects like gVisor or Firecracker and understand that quite a few people have concerns about this.

The recent explosion in popularity of AI agents, and their dependence on both scraping with a full-blown Chrome browser and running inference on a GPU, has many people revisiting this mantra and discovering that perhaps containers don't actually have a great isolation story, especially when it comes to multi-tenant workloads.

I was curious, though, why "container isolation" is such a persistent belief. Yes, there are indeed crazy amounts of marketing around this notion. Yes, there is the notion that people don't like to admit they have problems. I think there is something else going on here, though.

I think one of the issues is that a lot of people really don't understand the scope of what a "container" actually is and does. A lot of kernel maintainers feel very uneasy about how much attack surface is present in the mechanisms used to create a "container", as the kernel itself doesn't really have the notion per se.

There is no "create_container(2)". There is the idea of cgroups. There is the idea of namespaces, and many different varieties of namespaces, but there is no "container". There are clone and unshare and things like that, but as we'll describe, these are all infinitely configurable, and therein lies a big problem.

There are pid, net, mnt, uts, ipc, user, cgroup, and time namespaces.
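To make the "no create_container(2)" point concrete, here is a minimal sketch that treats a "container" as nothing more than the set of CLONE_NEW* flags a runtime passes to clone(2) or unshare(2). The flag values are copied from Linux's `<linux/sched.h>`; the `NAMESPACE_FLAGS` table and both helper functions are hypothetical names for illustration. It creates no namespaces; it only counts how many namespace combinations exist before you even get to mounts, capabilities, cgroups, or seccomp.

```python
from itertools import combinations

# CLONE_NEW* values as defined in Linux's <linux/sched.h>.
# Hypothetical table for illustration only; on Linux, Python 3.12+ also
# exposes most of these directly as os.CLONE_NEW*.
NAMESPACE_FLAGS = {
    "time":   0x00000080,  # CLONE_NEWTIME
    "mnt":    0x00020000,  # CLONE_NEWNS
    "cgroup": 0x02000000,  # CLONE_NEWCGROUP
    "uts":    0x04000000,  # CLONE_NEWUTS
    "ipc":    0x08000000,  # CLONE_NEWIPC
    "user":   0x10000000,  # CLONE_NEWUSER
    "pid":    0x20000000,  # CLONE_NEWPID
    "net":    0x40000000,  # CLONE_NEWNET
}

def clone_mask(namespaces):
    """OR together the clone flags for the requested namespace types."""
    mask = 0
    for name in namespaces:
        mask |= NAMESPACE_FLAGS[name]
    return mask

def all_masks():
    """Every distinct set of namespaces a runtime could choose to unshare."""
    names = list(NAMESPACE_FLAGS)
    masks = set()
    for k in range(len(names) + 1):
        for combo in combinations(names, k):
            masks.add(clone_mask(combo))
    return masks

if __name__ == "__main__":
    print(hex(clone_mask(["mnt", "uts", "ipc", "pid", "net"])))
    print(len(all_masks()))  # 2**8 = 256 combinations of namespaces alone
```

Each runtime picks its own point in that space, and every other knob multiplies it further.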

"On their own, the only use for cgroups is for simple job tracking." - Paul Menage - CGROUPS V1 - 2008 - kernel.org

There is the concept of privileged vs. unprivileged containers. Of course, there are huge problems with both. To make things more confusing, cgroups didn't even use to be namespaced (the cgroup namespace only arrived in Linux 4.6). The original document for cgroups clearly states: "On their own, the only use for cgroups is for simple job tracking." It was a scheduling/resource tracking mechanism. Cgroups were not designed for security. I mention this because projects that *are* designed with security as a focus are designed quite differently: deliberately, and they go to great lengths to describe what they are trying to provide.
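The "job tracking" heritage is visible in `/proc/<pid>/cgroup`, which records nothing more than which group a process belongs to: one `hierarchy-ID:controller-list:path` line per hierarchy, with cgroup v2 using a single `0::/path` line. A minimal parsing sketch (the `CgroupEntry` type and `parse_proc_cgroup` function are hypothetical names); note that nothing in this file expresses a security policy, only membership.

```python
from typing import List, NamedTuple

class CgroupEntry(NamedTuple):
    hierarchy_id: int
    controllers: List[str]  # empty for the unified cgroup v2 hierarchy
    path: str

def parse_proc_cgroup(text: str) -> List[CgroupEntry]:
    """Parse the contents of /proc/<pid>/cgroup.

    Each line is 'hierarchy-ID:controller-list:cgroup-path'. The path may
    itself contain ':', so only the first two colons are split on.
    """
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        hid, controllers, path = line.split(":", 2)
        entries.append(CgroupEntry(
            hierarchy_id=int(hid),
            controllers=[c for c in controllers.split(",") if c],
            path=path,
        ))
    return entries

if __name__ == "__main__":
    try:
        with open("/proc/self/cgroup") as f:
            for entry in parse_proc_cgroup(f.read()):
                print(entry)
    except FileNotFoundError:
        print("no /proc/self/cgroup here (not Linux?)")
```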

Namespaces

Not even a month ago, the Qualys Threat Research Unit discovered three different bypasses of Ubuntu's unprivileged user namespace restrictions. The whole idea behind unprivileged user namespaces is to give users root inside a namespace in a safer manner than actually running as root, which is what most people do and which is highly insecure in and of itself. The problem is that unprivileged user namespaces have themselves been repeatedly used to exploit kernel vulnerabilities, which is why the restrictions exist in the first place. These three bypasses defeated those countermeasures.

If you found the prior paragraph confusing, you are not alone: it is total Keystone Cops.
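If you want to see how your own machine is configured, the relevant knobs live under `/proc/sys`. Which files exist depends on the kernel and distribution: `user/max_user_namespaces` is upstream, `kernel/unprivileged_userns_clone` comes from a Debian/Ubuntu kernel patch, and `kernel/apparmor_restrict_unprivileged_userns` is the Ubuntu (23.10+) AppArmor restriction that the Qualys bypasses targeted. This is a read-only sketch; `read_userns_sysctls` and its `root` parameter (used only to point it at a fake tree) are hypothetical, and missing files are reported as `None` rather than guessed.

```python
from pathlib import Path
from typing import Dict, Optional

# sysctl files that influence unprivileged user namespaces.
# Availability varies by kernel and distribution; absent files map to None.
USERNS_SYSCTLS = {
    "max_user_namespaces": "/proc/sys/user/max_user_namespaces",                # upstream
    "unprivileged_userns_clone": "/proc/sys/kernel/unprivileged_userns_clone",  # Debian/Ubuntu patch
    "apparmor_restrict_unprivileged_userns":
        "/proc/sys/kernel/apparmor_restrict_unprivileged_userns",               # Ubuntu 23.10+
}

def read_userns_sysctls(root: str = "/") -> Dict[str, Optional[str]]:
    """Return the raw value of each knob, or None if absent or unreadable."""
    values: Dict[str, Optional[str]] = {}
    for name, path in USERNS_SYSCTLS.items():
        p = Path(root) / path.lstrip("/")
        try:
            values[name] = p.read_text().strip()
        except OSError:
            values[name] = None
    return values

if __name__ == "__main__":
    for name, value in read_userns_sysctls().items():
        print(f"{name}: {value if value is not None else 'not present'}")
```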

When people start talking about container isolation they are typically referring to namespaces with the addition of some seccomp (seccomp additionally lacks a lot of luster but that is a different article).
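To check which of these mechanisms a process is actually running under, Linux exposes seccomp state in `/proc/<pid>/status`: the `Seccomp:` field is 0 (disabled), 1 (strict) or 2 (filter), `Seccomp_filters:` (Linux 5.9+) counts installed filters, and `NoNewPrivs:` (Linux 4.10+) shows the no_new_privs bit. A small parsing sketch with hypothetical function names. Seeing "filter" only tells you a filter is installed, not whether it is any good.

```python
from typing import Dict, Optional

SECCOMP_MODES = {0: "disabled", 1: "strict", 2: "filter"}

def parse_status(text: str) -> Dict[str, str]:
    """Split /proc/<pid>/status 'Key:<whitespace>value' lines into a dict."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key] = value.strip()
    return fields

def seccomp_report(text: str) -> Dict[str, Optional[str]]:
    """Summarize the seccomp-related fields; missing fields become None."""
    fields = parse_status(text)
    mode = fields.get("Seccomp")
    return {
        "seccomp": SECCOMP_MODES.get(int(mode), "unknown") if mode is not None else None,
        "filters": fields.get("Seccomp_filters"),   # Linux 5.9+
        "no_new_privs": fields.get("NoNewPrivs"),   # Linux 4.10+
    }

if __name__ == "__main__":
    try:
        with open("/proc/self/status") as f:
            print(seccomp_report(f.read()))
    except FileNotFoundError:
        print("no /proc/self/status here (not Linux?)")
```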

API Abuse

One of the big problems here is that each namespace opens up a wide attack surface. It isn't just that you need to look at the kernel side of things to ensure they are "secure". You also need to look at how programs use and abuse these facilities. A lot of the problems discovered and fixed over the past several years came from issues like race conditions (for instance, one process or thread updating something in a different order, or at a different frequency, than another process or thread expects) or from simply failing to restrict an operation to the directory it should have been confined to.

What I'm trying to say is that many of these problems are not even the kernel's fault per se: they come from abuse of the very large API that exists. That, in my opinion, is one major reason we see the security challenges we do with containers.
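The "didn't restrict it to a directory" class of bug described above usually starts life as a string-prefix check on a path. A minimal sketch contrasting a naive check with one that resolves `..` and symlinks first; both function names are hypothetical. Even the resolved version is a check-then-use pattern: if an attacker can swap a path component for a symlink between the check and the open, you are back to a race condition, which is exactly how these two kinds of bugs end up paired.

```python
import os

def naive_is_inside(root: str, user_path: str) -> bool:
    """BROKEN: a string-prefix check. '../' and symlinks both defeat it,
    and '/srv/app-evil' passes for root '/srv/app'."""
    return os.path.join(root, user_path).startswith(root)

def resolved_is_inside(root: str, user_path: str) -> bool:
    """Resolve '..' and symlinks, then compare whole path components."""
    real_root = os.path.realpath(root)
    target = os.path.realpath(os.path.join(real_root, user_path))
    return os.path.commonpath([real_root, target]) == real_root
```

The resolved check is still only a check; the open that follows it happens later, against whatever the filesystem looks like at that moment.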

The analogy here is kinda like this: the kernel did all the framing for a house but left it up to the "container" software to do all the siding, roofing, windows, and doors. It's like the three little pigs. One had a house made of straw, one made of sticks, and the other of bricks. Except... in this world none of them are made of bricks.

The other really large problem is that containers share a kernel. You'll hear Docker aficionados repeat this, and yet they don't seem to understand what it actually means. It means that the exact same kernel bug is much more dangerous on a system running multiple containers than on one running separate virtual machines, because the VMs don't share a kernel. If you break the kernel, you can't immediately attack all the other software running on different hosts.
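One way to see "sharing a kernel" for yourself: namespaces only change what a process can see, so comparing the namespace identifiers under `/proc/<pid>/ns/` for a containerized process and a host process shows which views differ, while the kernel release (`os.uname().release`, i.e. `uname -r`) is the same either way. A Linux-only sketch with hypothetical helper names; reading another process's ns links is subject to a ptrace access check, so you generally need privileges over that process.

```python
import os
from typing import Dict

def namespace_ids(pid: int) -> Dict[str, str]:
    """Map namespace type -> identifier such as 'net:[4026531840]'.

    Returns an empty dict if /proc/<pid>/ns is missing or unreadable.
    """
    ns_dir = f"/proc/{pid}/ns"
    ids: Dict[str, str] = {}
    try:
        names = os.listdir(ns_dir)
    except OSError:
        return ids
    for name in names:
        try:
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            pass  # e.g. permission denied for another user's process
    return ids

def compare_namespaces(pid_a: int, pid_b: int) -> Dict[str, bool]:
    """True for each namespace type the two processes share."""
    a, b = namespace_ids(pid_a), namespace_ids(pid_b)
    return {name: a[name] == b[name] for name in a.keys() & b.keys()}

if __name__ == "__main__":
    print("kernel:", os.uname().release)  # same inside and outside a container
    for name, shared in sorted(compare_namespaces(os.getpid(), 1).items()):
        print(f"{name:20} {'shared with pid 1' if shared else 'separate'}")
```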

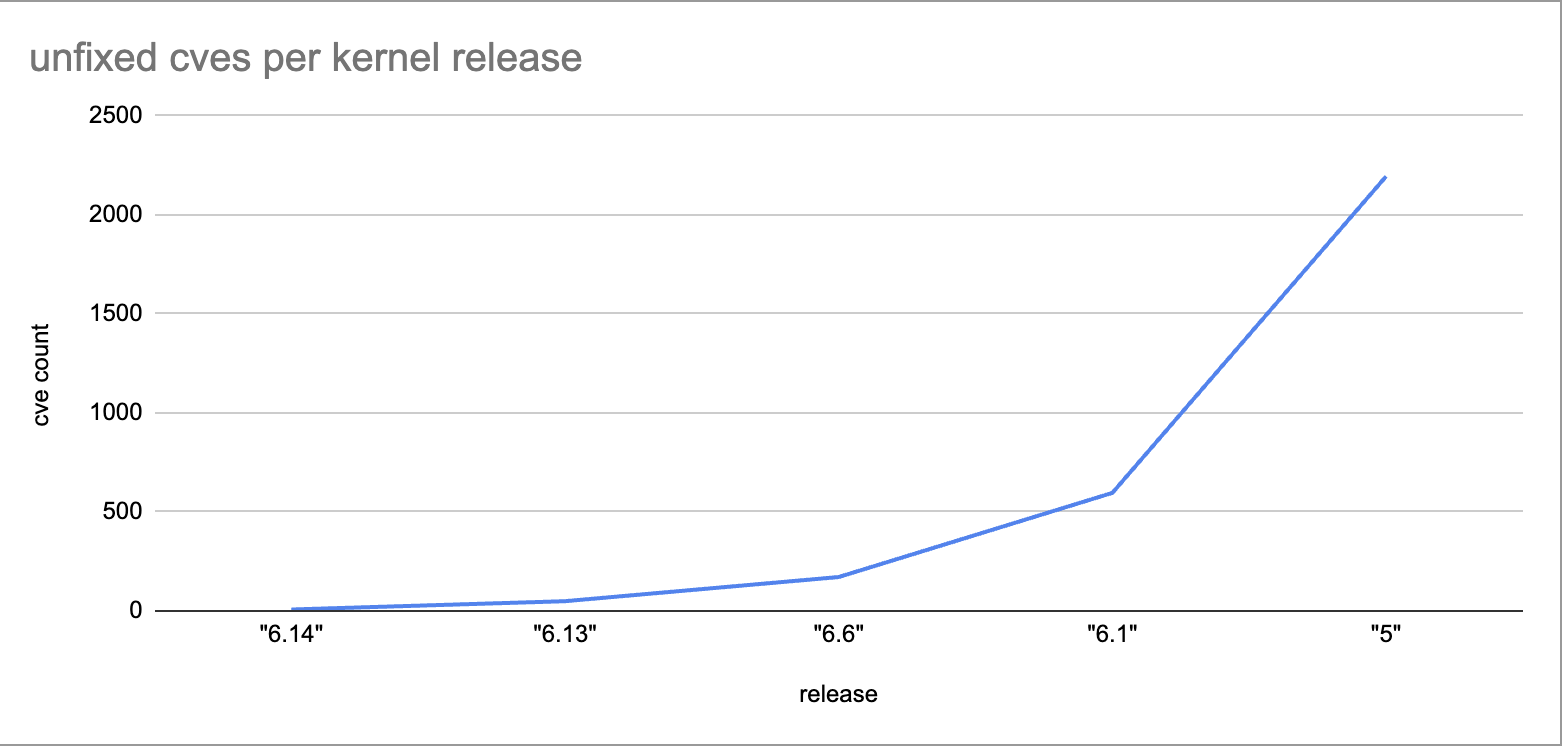

I mentioned this the other day on the socials, but ever since the Linux kernel became its own CNA (CVE Numbering Authority), it has been publishing tons of CVEs. There's something like 615 CVEs this year alone. Also, it was brought up a few months ago, and Greg K-H has acknowledged, that a lot of earlier kernel releases still in LTS support aren't actually getting security updates because ... wait for it ... Linux is a volunteer project and no one has stepped forward to do it, despite the fact that the recent RSA conference drew an estimated 44,000 attendees.

Think about that.

Here's my shitty little Google Sheets graph of what this looks like:

I don't have the time to go through and enumerate all of them since 2008, 2012, or 2015, so let's just look at a handful of vulnerabilities from last year up to now to get a better idea of what we're actually looking at here.

Container Related CVEs from 2024 Through May 2025

If you start out by looking for CVEs that mention cgroups, things don't seem so bad:

Cgroup CVEs

The first one we're greeted with is a use-after-free (UAF). I can hear the crabs in the audience rubbing their pincers together.

Moving on we might start digging into various namespace related ones:

Namespace CVEs

Ah, CVE-2024-50204: we're trying to free a mount namespace too soon. In the second one, we run into an issue when removing a net namespace.

Then we might look at popular versions of "containers". Remember, we're not just talking about Docker here. Remember earlier when we were stating that there is no "create_container(2)"? This causes all sorts of hell to break loose.

Container CVEs

- https://www.cve.org/CVERecord?id=CVE-2024-9676

- https://www.cve.org/CVERecord?id=CVE-2024-3056

- https://www.cve.org/CVERecord?id=CVE-2024-29018

- https://www.cve.org/CVERecord?id=CVE-2024-21626

In CVE-2024-9676 we see a symlink traversal vulnerability that affects Podman, Buildah, and CRI-O - a triple KO. These path traversal issues are extremely common in container land. CVE-2024-21626 is interesting as it enabled multiple complete container escape attacks because runc was leaking file descriptors (fds). You would think that, since every engineer has to deal with files, this sort of bug wouldn't be very common, but just like our friend the symlink traversal, it's super common with containers. Keep in mind that this was not a kernel issue; it was an issue in Go code. This is what I keep pointing at: there is a really horrible bifurcation of responsibility when it comes to containers.
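The runc advisory for CVE-2024-21626 describes an internal file descriptor leak that could leave a newly spawned container process with a working directory in the host filesystem. Descriptors survive exec(2) unless they carry the close-on-exec flag, so one generic way to audit your own code for this class of bug is to list which descriptors are inheritable. A minimal sketch; `inheritable_fds` is a hypothetical helper that reads `/proc/self/fd` on Linux and falls back to `/dev/fd` elsewhere.

```python
import os
from typing import List

def inheritable_fds() -> List[int]:
    """Return open fds that will survive exec(), i.e. lack FD_CLOEXEC."""
    fd_dir = "/proc/self/fd" if os.path.isdir("/proc/self/fd") else "/dev/fd"
    leaked = []
    for entry in os.listdir(fd_dir):
        fd = int(entry)
        try:
            if os.get_inheritable(fd):
                leaked.append(fd)
        except OSError:
            # The descriptor os.listdir used to read the directory itself
            # is already closed by the time we check it.
            continue
    return sorted(leaked)

if __name__ == "__main__":
    # stdin/stdout/stderr are normally inheritable on purpose; anything
    # else listed here is handed to every program this process execs.
    print(inheritable_fds())
```

Python opens descriptors as non-inheritable by default (PEP 446), so a stray entry here usually means someone explicitly opted in, which is the kind of thing worth reviewing.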

Then we get to Moby, or Docker, or whatever you want to call the cartoon whale.

Moby / Docker CVEs

- https://nvd.nist.gov/vuln/detail/CVE-2024-36623

- https://nvd.nist.gov/vuln/detail/CVE-2024-36621

- https://nvd.nist.gov/vuln/detail/CVE-2024-36620

- https://nvd.nist.gov/vuln/detail/CVE-2024-41110

- https://nvd.nist.gov/vuln/detail/CVE-2024-32473

- https://nvd.nist.gov/vuln/detail/CVE-2024-29018

- https://nvd.nist.gov/vuln/detail/CVE-2024-24557

It should be noted that software such as Docker can and will share similar or identical problems with other container software, depending on which layer the bug lives at; however, for this comparison I tried to keep the list specific to Docker.

Kubernetes CVEs

There have been seven CVEs issued for Kubernetes this year alone:

- https://www.cve.org/CVERecord?id=CVE-2025-1974

- https://www.cve.org/CVERecord?id=CVE-2025-1098

- https://www.cve.org/CVERecord?id=CVE-2025-1097

- https://www.cve.org/CVERecord?id=CVE-2025-24514

- https://www.cve.org/CVERecord?id=CVE-2025-24513

- https://www.cve.org/CVERecord?id=CVE-2025-1767

- https://www.cve.org/CVERecord?id=CVE-2025-0426

I didn't list the 79 or so that involved k8s last year.

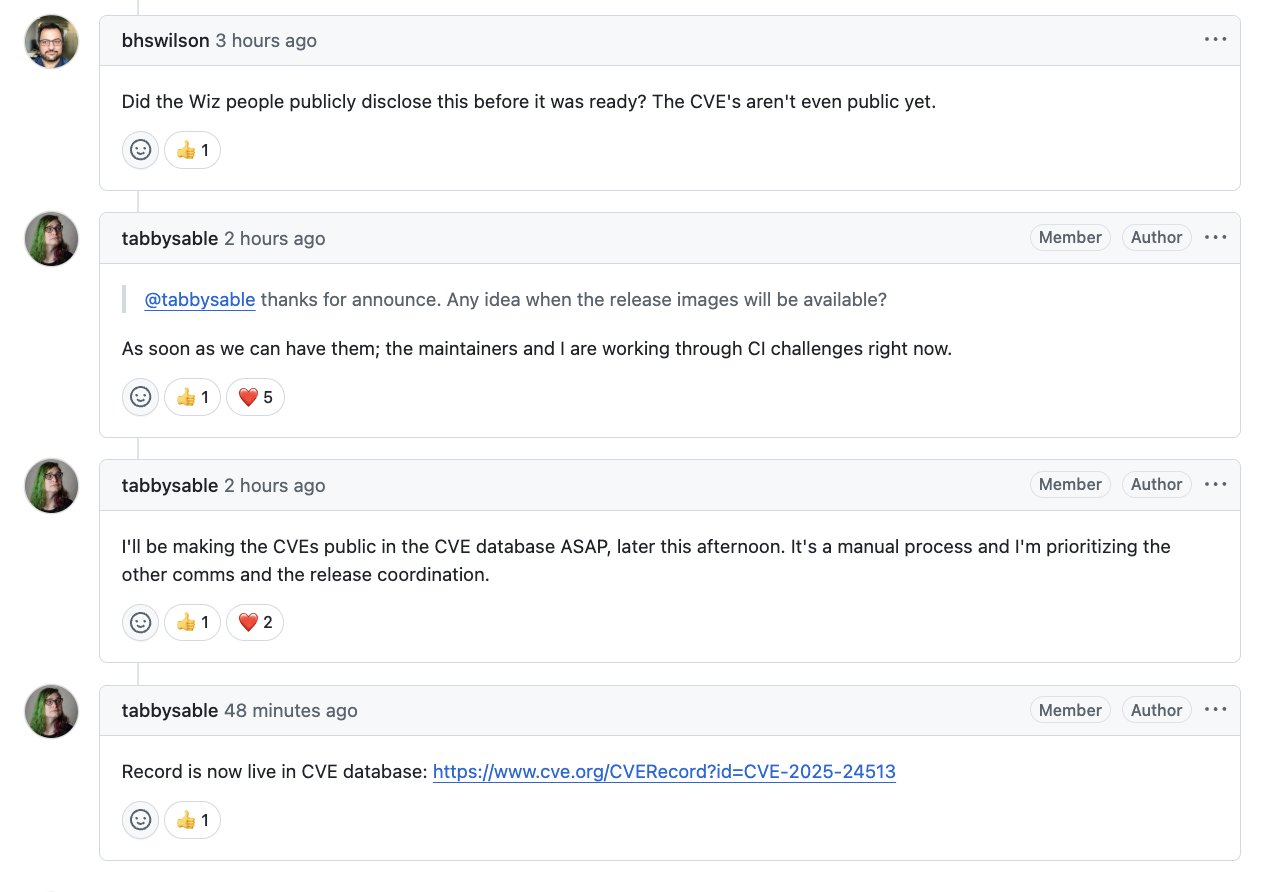

The very first one on the list, CVE-2025-1974, is the aptly named "Ingress Nightmare" (you know it's important if it gets a nifty name to go with it), and it turned into a total shit show because it was disclosed before the CVEs (it was a set) were even public and before a fix had been pushed. Nightmare indeed. Hooray for responsible disclosure.

Of course, k8s doesn't exist in isolation. No, the loud and proud CNCF landscape sports over 200 additional projects that form part of the "cloud native ecosystem".

Cloud Native Ecosystem CVEs

- https://www.cve.org/CVERecord?id=CVE-2025-46342

- https://www.cve.org/CVERecord?id=CVE-2025-29781

- https://www.cve.org/CVERecord?id=CVE-2025-24376

- https://www.cve.org/CVERecord?id=CVE-2025-24784

- https://www.cve.org/CVERecord?id=CVE-2024-7598

- https://www.cve.org/CVERecord?id=CVE-2024-48921

- https://www.cve.org/CVERecord?id=CVE-2024-43803

- https://www.cve.org/CVERecord?id=CVE-2024-39690

- https://www.cve.org/CVERecord?id=CVE-2024-31989

- https://www.cve.org/CVERecord?id=CVE-2024-13484

If it's the first time you're seeing this wall of death, keep in mind this is just from last year and the start of this year. This list gets very big very fast if you start going back more than a year or so.

As mentioned, K8s security disclosures have been a bit of a mess lately too:

Since the CNCF ecosystem is so large, there is a lot of software considered "best practice" that gets widely installed in k8s clusters. This extends the existing attack surface by quite a lot. A lot of it exists because its authors are reinventing the wheel.

Indeed, when you watch k8s users deploy software, they are never deploying just one piece of software; they end up deploying something like 20. Something to do auth, something to do ingress, something to do auditing, and the list goes on and on and on. It's called resume-driven development for a reason.

If you click on any of these CVEs, you can start to see the types of security issues that exist, and while I didn't try to break each of them down into categories, you can easily tell that a lot of this is what I was talking about earlier: "containers" are really one big sloppy joe of an API that was poorly designed WITHOUT SECURITY IN MIND, and it's not even defined. It is a choose-your-own-adventure, subject to the whims of some random product manager at one of the numerous companies pushing this onto the unsuspecting public.

Common Weaknesses of Containers

I put together a short, and by no means complete, list of CWEs that show some of the common weaknesses exhibited by the container ecosystem, and as you can tell, a lot of it has nothing to do with the kernel at all:

- CWE-22 - improper limitation of a pathname to a restricted directory

- CWE-362 - race conditions

- CWE-284 - improper access control

- CWE-285 - improper authorization

- CWE-863 - incorrect authorization

- CWE-327 - use of a broken or risky cryptographic algorithm

- CWE-400 - uncontrolled resource consumption

- CWE-669 - incorrect resource transfer

- CWE-476 - null pointer dereference

- CWE-444 - inconsistent interpretation of http requests

- CWE-187 - partial string comparison

- CWE-668 - exposure of resource to wrong sphere

- CWE-346 - origin validation error

- CWE-345 - insufficient verification of data authenticity

- CWE-416 - use after free

- CWE-403 - exposure of file descriptor to unintended control sphere ('file descriptor leak')
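CWE-22 and CWE-362 tend to show up together in container runtimes: the runtime checks that a path stays inside the container root, then opens it, and the container swaps a path component for a symlink in between. One common mitigation is to walk the path one component at a time relative to an already-open directory fd, refusing symlinks and `..`, so the open itself is the check. A minimal sketch under those assumptions; `open_beneath` is a hypothetical name, and on Linux 5.6+ openat2(2) with `RESOLVE_BENEATH` or `RESOLVE_IN_ROOT` does this in the kernel, but Python's standard library does not wrap it.

```python
import os

def open_beneath(root: str, relpath: str, flags: int = os.O_RDONLY) -> int:
    """Open relpath inside root without following any symlink or '..'.

    Each component is opened relative to the previous directory fd with
    O_NOFOLLOW, so there is no separate check step to race against.
    Raises OSError (e.g. ELOOP) on a symlink, ValueError on '..'.
    """
    parts = [p for p in relpath.split("/") if p not in ("", ".")]
    if not parts:
        raise ValueError("empty path")
    if ".." in parts:
        raise ValueError("'..' is not allowed")
    dir_fd = os.open(root, os.O_RDONLY | os.O_DIRECTORY)
    try:
        for part in parts[:-1]:
            next_fd = os.open(part, os.O_RDONLY | os.O_DIRECTORY | os.O_NOFOLLOW,
                              dir_fd=dir_fd)
            os.close(dir_fd)
            dir_fd = next_fd
        return os.open(parts[-1], flags | os.O_NOFOLLOW, dir_fd=dir_fd)
    finally:
        os.close(dir_fd)  # the returned fd stays open; the caller closes it
```

This is deliberately strict: legitimate symlinks inside the root are rejected too, which is usually the right trade-off for a runtime touching untrusted container filesystems.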

I know someone will say "any popular software is going to have a lot of vulnerabilities", and I would totally agree, but again, when you compare with other infrastructure paradigms, such as a plain old vanilla EC2 instance, the trust boundary is at the VM layer, so you immediately prevent kernel bugs from automatically jumping from host to host. You also immediately avoid the rest of the ecosystem's bugs, which, if exploited, could give an attacker access to other infrastructure precisely because the compromised component is part of said cluster. In this regard, containers are doubly dangerous.

Keep in mind that for all of this we've only talked about issued CVEs, not the cryptojacking, or the fact that companies are now marketing "zero CVE images" because practically every Docker image you look at is chock-full of other CVEs that have nothing to do with container CVEs at all.

We also can't talk about all the security issues that exist but haven't been assigned a CVE yet, because that's not something the CVE system can track.

Docker and Kubernetes were never designed to be a sandbox or an isolated environment. If you like to use them because they make things easy for your engineering department, knock yourself out, but don't be fooled into thinking these things ever were or ever will be a security boundary.
