No one that I know of is really tracking container security issues. I've mentioned on a few occasions that most of the container security issues we see aren't really anything to do with the kernel as the kernel does not actually provide "containers" - it's all these various runtimes that do. I wanted to quantify this somehow and I kinda fell into a rabbit hole because there are just endless amounts of security issues in the broader container/k8s ecosystem.

This was actually not super easy to put together. Both docker and kubernetes are CNAs, so you could look only at the cves they publish, but then you have to consider that a lot of other runtimes exist, such as podman and runc. To top it off, a lot of the broader container/k8s ecosystem enables really bad security issues like escapes because of how all of this operates.

Searching for 'container' is also a bit difficult to do because it conflicts with existing terminology in cve records.

I also tried to get this article out fast once I decided I was going to write it but new stuff just kept coming up.

When I was looking at this I tried to set some ground rules. I'm really focused in on direct security issues involving specific container runtimes and kubernetes but it's impossible to ignore the rest of the ecosystem that has severe issues as well, so while I might mention those, they are not the focus. Also, I'm not going to touch at all on "images that have cves". This is a well understood problem domain that many companies are heavily entrenched in and offer so-called "zero cve" images. It's a problem, but I just don't care about it for this specific purpose.

Just to be clear - don't read these lists as "authoritative". Again, no one is really tracking this ecosystem at the degree, fidelity and scope I'm interested in, so I have most definitely missed many that you might find, and I might have categorized something differently than you would, based solely on a judgement call. Many of the types of attacks aren't clearly spelled out and don't surface in the data. You have to look at the weakness and the code and determine what is actually possible.

In certain cases I've chosen to ignore entire categories of security issues as, while they are important to address, I just don't care enough about them. So we won't be looking at any XSS or dos style issues. I will say when trying to categorize things dos attacks were right up there with infoleaks. Infoleaks were hands down some of the most problematic security concerns being reported as cves in terms of just sheer numbers but I didn't want to turn this into a listicle so I've chosen to largely leave those out as well.

Also, be aware that typically many of these have multiple weaknesses allowing for a variety of scenarios to play out - so one type of vulnerability might allow for privilege escalation, data exfil, and infoleaks. It is almost never the case that it's just one or the other - it's typically many problems. So you typically want to try and take a top down view of the threat. For instance in the case of a container escape - all bets are off - the infrastructure is just completely hosed at that point.

There were actually quite a lot of cves that don't get any attention at all - in fact you won't find any blogposts on them.

I was going to look at the broader 'cloud native' ecosystem but honestly - it's just way too much.

16 Container Escapes

I counted sixteen container escapes from 2025 into the beginning of February. Container escapes are probably the worst type of vulnerability a container ecosystem can have since they destroy the entire architecture.

Eight of these were in actual container runtimes while the others were in things like orchestrators which exposed the runtime to an escape. This, again, is the problem with container security: there is no singular definition of what a container actually *is*, and even if there were, the orchestrators come in and completely rewrite the rules.

Half of the Escapes Weren't Because of the Runtime and Zero Were Kernel Related

This is a really important point - half of the container escapes I saw weren't even because of the container runtime and literally ZERO of the container escapes I saw were because of kernel related issues.

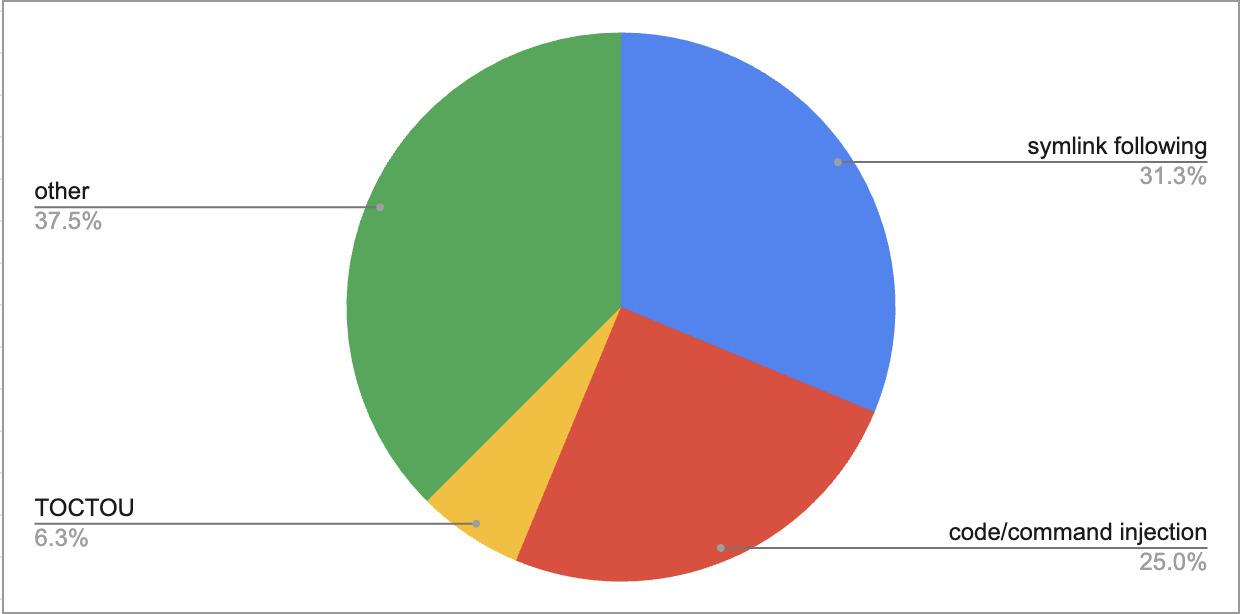

So what are the most common causes of container escapes from what we saw last year?

While seeing symlink issues be the number one culprit in the container ecosystem for escapes is not surprising, I was surprised to see only one TOCTOU (time of check to time of use) vulnerability - typically these make up a much higher percentage. Various code and command injections took second place - mostly found in other software that orchestrates containers. This was an interesting insight as I routinely see folks on HN comment about how "containers are just linux processes" and you can just run them yourself. While you certainly can run them yourself, without knowledge of the various methods attackers use against them you are probably going to get owned. We saw this quite a lot in CI build systems, infrastructure tools, with folks that think containers are a form of sandbox (they are not) and others.

- CVE-2025-31133 - cwe-61 - symlink following

- CVE-2025-23266 - cwe-426 - untrusted search path

- CVE-2025-3224 - cwe-59 - link following

- CVE-2025-34025 - cwe-732 - incorrect permission

- CVE-2025-34159 - cwe-94 - code injection

- CVE-2025-47290 - cwe-367 - toctou

- CVE-2025-52565 - cwe-61 - symlink following

- CVE-2025-53372 - cwe-77 - command injection

- CVE-2025-54867 - cwe-61 - symlink following

- CVE-2025-57802 - cwe-61 - symlink following

- CVE-2025-58766 - cwe-94 - code injection

- CVE-2025-59156 - cwe-78 - os command injection

- CVE-2025-62161 - cwe-61 - symlink following

- CVE-2025-64507 - cwe-269 - improper privilege management

- CVE-2025-69426 - cwe-798 - hard-coded credentials

- CVE-2025-9074 - cwe-668 - exposure of resources to wrong sphere
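For anyone who wants to re-derive the breakdown from the list above, a small tally is enough. This is only a sketch: the `GROUPS` mapping (folding cwe-59 and cwe-61 together, and cwe-77, cwe-78 and cwe-94 together) is an assumption made for illustration, not an official taxonomy.

```python
from collections import Counter

# (CVE, CWE number) pairs copied from the list above.
ESCAPES = [
    ("CVE-2025-31133", 61), ("CVE-2025-23266", 426),
    ("CVE-2025-3224", 59), ("CVE-2025-34025", 732),
    ("CVE-2025-34159", 94), ("CVE-2025-47290", 367),
    ("CVE-2025-52565", 61), ("CVE-2025-53372", 77),
    ("CVE-2025-54867", 61), ("CVE-2025-57802", 61),
    ("CVE-2025-58766", 94), ("CVE-2025-59156", 78),
    ("CVE-2025-62161", 61), ("CVE-2025-64507", 269),
    ("CVE-2025-69426", 798), ("CVE-2025-9074", 668),
]

# Assumed grouping for this sketch only; ungrouped CWEs keep their own bucket.
GROUPS = {
    59: "link following", 61: "link following",
    77: "injection", 78: "injection", 94: "injection",
    367: "toctou",
}


def tally(entries):
    """Count escapes per weakness group."""
    return Counter(GROUPS.get(cwe, f"cwe-{cwe}") for _, cwe in entries)


if __name__ == "__main__":
    for group, count in tally(ESCAPES).most_common():
        print(f"{count:2d}  {group}")
```

With that grouping, link following accounts for six of the sixteen and injection for four.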

Now when you are looking at the "causes" - one thing you'll notice with cve publications is sometimes the cwe is picked somewhat randomly. Sometimes there are multiple weaknesses and only one is chosen. Sometimes a similar weakness is chosen instead of a more common one. Furthermore, there are no rules to say that something should have the word "escape" in the description so if you are merely searching for these you probably won't find them. You have to actually understand what is going on with the vulnerability first but anytime you can start executing or modifying things on the host - you are toast - that is an escape.

So when categorizing these and the rest of these lists understand that I've actually looked at the affected code and made a judgement call myself.
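To make the symlink-following class concrete, here is a minimal sketch of the general pattern. It is not taken from any of the CVEs above, and the function names `naive_resolve` and `checked_resolve` are hypothetical. A tool running on the host joins a container-supplied path onto the rootfs and lets the OS follow whatever link the image planted.

```python
import os
import tempfile


def naive_resolve(rootfs, container_path):
    """Join and follow links blindly; a planted symlink can point at the host."""
    return os.path.realpath(os.path.join(rootfs, container_path.lstrip("/")))


def checked_resolve(rootfs, container_path):
    """Resolve, then refuse anything that lands outside rootfs.

    Still racy: the path can be swapped between this check and the later
    use (TOCTOU), so treat this as an illustration rather than a fix.
    """
    root = os.path.realpath(rootfs)
    target = os.path.realpath(os.path.join(root, container_path.lstrip("/")))
    if os.path.commonpath([root, target]) != root:
        raise PermissionError(f"{container_path!r} escapes {root!r}")
    return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as host:
        rootfs = os.path.join(host, "rootfs")
        os.makedirs(rootfs)
        secret = os.path.join(host, "host-secret")
        with open(secret, "w") as f:
            f.write("host data")
        # A malicious image ships a symlink pointing outside its own root.
        os.symlink(secret, os.path.join(rootfs, "config"))
        print("naive:", naive_resolve(rootfs, "/config"))
        try:
            checked_resolve(rootfs, "/config")
        except PermissionError as err:
            print("blocked:", err)
```

A check-then-use approach like `checked_resolve` is exactly where TOCTOU bugs come from. On Linux the `openat2` syscall with `RESOLVE_IN_ROOT` exists so that resolution and confinement happen in one step.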

8 Remote Code Executions

I counted eight remote code executions. Many of these affected orchestrators and ci systems and would end up giving access to the entire cluster. They also serve as potent initial steps for much more serious issues as many of these were highly privileged. I've separated these from the next chunk of issues, where I didn't immediately confirm whether they were remote or not. Regardless, a number of these can lead directly to an escape.

- CVE-2025-27519

- CVE-2025-30206

- CVE-2025-48710

- CVE-2025-66626

- CVE-2025-69201

- CVE-2026-23520

- CVE-2026-24841

- CVE-2026-24129

29 Command/Code Injections

I counted twenty-nine command/code injections. Command injection is a much more serious issue here than code injection, but because I don't want this blogpost to go on for hundreds of pages I simply grouped both here. If you're curious about the difference between command injection and code injection and how they differ/intertwine we've written about it here.

Like the group above, some of these were ranked 9 and 10 because the code or command injection enabled almost complete ownage of a cluster. Path traversals were a key problem area here along with the fact that there is a lot of configuration injection that leads to the attacker being able to run commands or code.

- CVE-2026-24512

- CVE-2026-1580

- CVE-2026-24740

- CVE-2025-32445

- CVE-2025-29922

- CVE-2025-29778

- CVE-2025-13888

- CVE-2025-1974

- CVE-2025-24030

- CVE-2025-24514

- CVE-2025-1097

- CVE-2025-14707

- CVE-2025-36354

- CVE-2025-36355

- CVE-2025-64419

- CVE-2025-53355

- CVE-2025-53376

- CVE-2025-53542

- CVE-2025-53547

- CVE-2025-59359

- CVE-2025-59156

- CVE-2025-59360

- CVE-2025-59361

- CVE-2025-59376

- CVE-2025-59377

- CVE-2025-59823

- CVE-2026-23742

- CVE-2026-23953

- CVE-2026-24905
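As a rough illustration of the two classes grouped in this section, the sketch below contrasts them on a POSIX system. Everything in it is hypothetical (the function names, the "image tag" input) and it is not modelled on any specific CVE listed above.

```python
import subprocess
import sys


def pull_via_shell(image_tag):
    """Command injection: user input is pasted into a shell string."""
    result = subprocess.run(
        f"echo pulling {image_tag}", shell=True, capture_output=True, text=True
    )
    return result.stdout


def pull_via_argv(image_tag):
    """Safer: the input stays one argv element and no shell parses it."""
    script = "import sys; print('pulling', sys.argv[1])"
    result = subprocess.run(
        [sys.executable, "-c", script, image_tag], capture_output=True, text=True
    )
    return result.stdout


def evaluate_setting(expression):
    """Code injection: attacker text is executed by the interpreter itself."""
    return eval(expression)  # deliberately unsafe, for illustration only


if __name__ == "__main__":
    tag = "nginx:latest; echo INJECTED"
    print(pull_via_shell(tag))   # the shell runs a second command after ';'
    print(pull_via_argv(tag))    # the whole string is treated as data
    print(evaluate_setting("__import__('os').getcwd()"))
```

In the first function the attacker gets the operating system to run a new command. In the last one they get the application's own language runtime to run their code, which in practice often leads to the former anyway.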

17 Privilege Escalations

I counted seventeen privilege escalations. Again, a lot of these could've been grouped under a different group and many of these are quite severe (full cluster takeover) but gotta group them somehow.

- CVE-2025-36356

- CVE-2025-2241

- CVE-2025-2843

- CVE-2025-32955

- CVE-2025-36356

- CVE-2025-4563

- CVE-2025-53945

- CVE-2025-55205

- CVE-2025-57848

- CVE-2025-57850

- CVE-2025-57852

- CVE-2025-58712

- CVE-2025-59291

- CVE-2025-62156

- CVE-2025-7195

- CVE-2025-9164

- CVE-2026-23990

14 Auth Bypass

I counted fourteen auth bypasses. This is in a similar vein to the prior section but usually connotes that there was no auth to begin with versus "escalating" an existing privilege. Not surprisingly, we find that some of the frameworks that are ostensibly made to ensure this doesn't happen allow it to happen:

- CVE-2026-22822

- CVE-2025-27095

- CVE-2025-25196

- CVE-2025-25198

- CVE-2025-27403

- CVE-2025-29922

- CVE-2025-64171

- CVE-2025-64432

- CVE-2025-64436

- CVE-2025-64437

- CVE-2026-22039

- CVE-2026-22806

- CVE-2026-24748

- CVE-2026-24835

Categorizing Container Security Issues is Somewhat Difficult

Finally, many of the vulnerabilities I ran into go under the radar. A lot of very important vulnerabilities, such as being able to escape a container, don't receive much attention because, thankfully, most people aren't exposing endpoints to the raw internet so 'remote roots' don't really happen - it doesn't mean this isn't still highly problematic though.

The kernel has been publishing a lot of cves the past few years but very few of them become "hot" issues for containers - most of the vulnerabilities we see are still because of runtime issues. This is what I've always observed and was glad to see the data matches up with that observation.

Some container issues don't even receive cves such as DockerDash even though it provides for RCE!

Something we are starting to see more and more of is people who use containers but choose to do their own orchestration. Something as simple as not validating a service that is used off the shelf (which is kind of one of the main points of using containers) can add a volume mapping which makes it easy to escape. In this realm we saw ci systems, workflow systems, and anywhere shared templates were being used posing significant issues.
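To illustrate the kind of validation that tends to be missing, here is a minimal sketch that inspects a service definition before it is handed to a runtime. The dict layout loosely mirrors the Compose short volume syntax (`host:container[:mode]`), which is an assumption, and both `SENSITIVE_PREFIXES` and `risky_settings` are hypothetical names with a deliberately incomplete list.

```python
import posixpath

# Illustrative, incomplete list of host paths that hand over the host when mounted.
SENSITIVE_PREFIXES = (
    "/proc",
    "/sys",
    "/etc",
    "/var/run/docker.sock",
    "/run/docker.sock",
)


def risky_settings(service):
    """Return human-readable findings for a single service definition."""
    findings = []
    if service.get("privileged"):
        findings.append("privileged container")
    for volume in service.get("volumes", []):
        source = volume.split(":", 1)[0]
        if not source.startswith("/"):
            continue  # named volume or relative path; out of scope for this sketch
        source = posixpath.normpath(source)
        under_sensitive = any(
            source == prefix or source.startswith(prefix + "/")
            for prefix in SENSITIVE_PREFIXES
        )
        if source == "/" or under_sensitive:
            findings.append(f"host path mounted: {source}")
    return findings


if __name__ == "__main__":
    template = {
        "image": "some/shared-template",
        "volumes": ["/var/run/docker.sock:/var/run/docker.sock"],
    }
    for finding in risky_settings(template):
        print("refusing to run:", finding)
```

A real check would need to cover far more (capabilities, host namespaces, devices), but even this much would catch the "off the shelf template adds a volume mapping" case.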

For instance there were lots of random container escapes that have nothing to do with the runtime like CVE-2025-58766 in Dyad that allows escape.

There were more than a few issues such as CVE-2025-57848 - a privilege escalation issue because the build system was just janky. This is one of those types of issues that again, doesn't have much to do w/the runtime itself but is so easy to abuse - in this case an entire *pipeline* of containers.

There are Many Container Runtimes That Can Be Abused Differently

We saw container escapes both in runtimes you're probably aware of but also in other runtimes including the following:

- docker

- containerd

- runc

- nvidia container runtime

- youki

Important to note that there are many other runtimes as well - just because we didn't see escapes in them doesn't mean they don't exist nor does it mean we didn't see other serious problems with them:

- singularity

- cri-o

- podman

- crun

- runhcs

- apple container

I don't want to beat this horse to death but just to reiterate - this is one of the things that makes securing containers really hard. There is no one singular definition of what a container is - it's really up to the runtime itself. Some of these runtimes are built on top of each other and some are totally different. That's why many of these cves will not affect other runtimes and sometimes multiple runtimes are affected but others aren't.

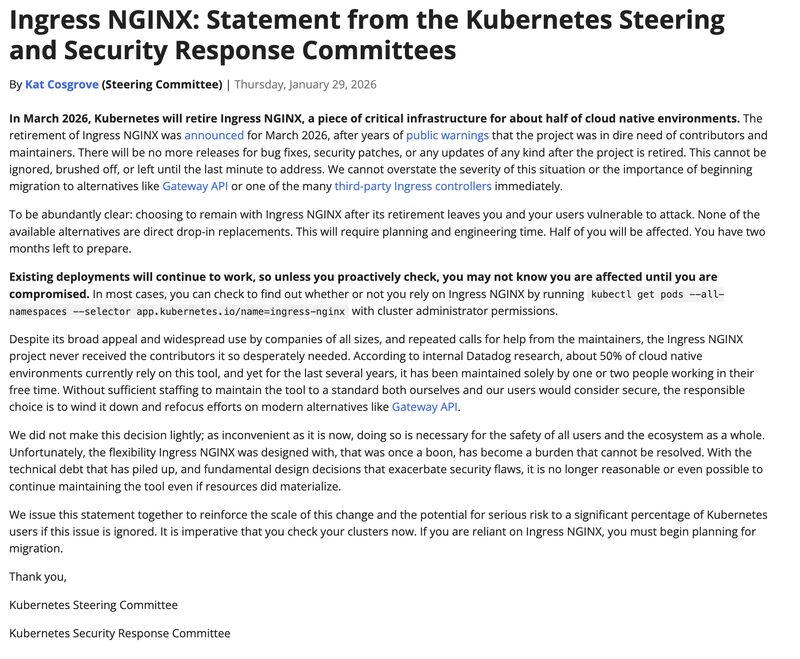

Kubernetes Amateur Hour

Remember when I mentioned that I was trying to get this blogpost out the door but "things kept happening"? Yeh, you can thank the k8s people for that. At the end of January the k8s people released a very hostile and condescending letter indicating that they were going to retire ingress nginx, even though half of their user base was still using it. Speculation was rife as to why the letter was so hostile and it only took about a week to find out.

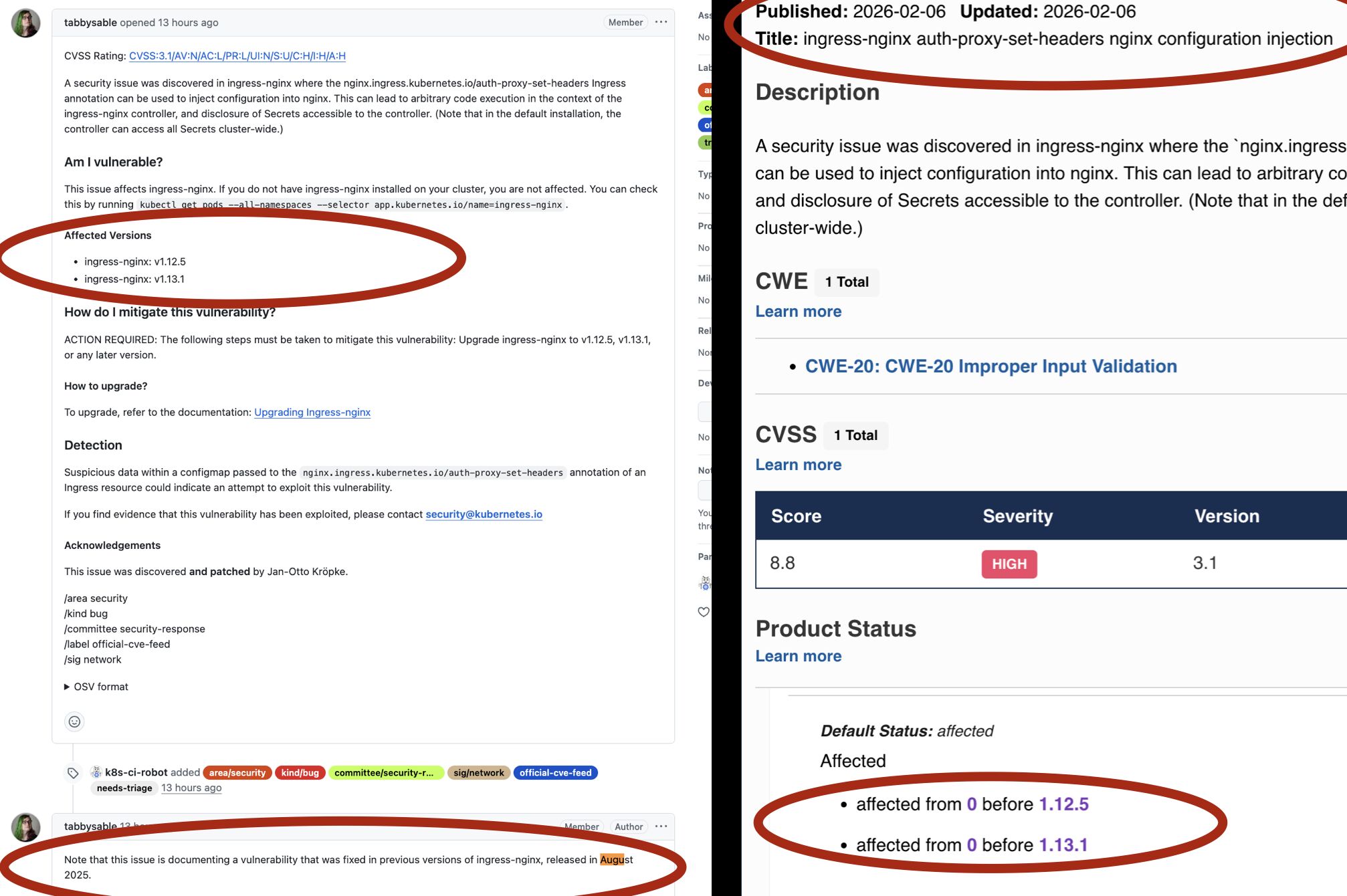

After this letter was published, four cves for ingress-nginx were published: CVE-2026-24513, CVE-2026-24514, CVE-2026-24512, CVE-2026-1580. Auth bypass, config injection, and dos. None of the builds were ready to use at the time of the announcement and none of the data was in NVD or MITRE. This means that all those fancy scanners your company pays megabucks for could not actually scan to see if you were affected. It'd be one thing if this were the first time that it happened - but it happens all the time with the kubernetes people.

Then about a week later we saw them do this again, announcing a new cve, which was actually a much older cve that they had already patched in August but failed to inform the community about. In the announcement they also mixed up the versions which makes it hard for scanners (and the humans who operate them) to know if you are affected or not.

This repeated behavior has caused a lot of bewilderment amongst their more security conscious userbase.

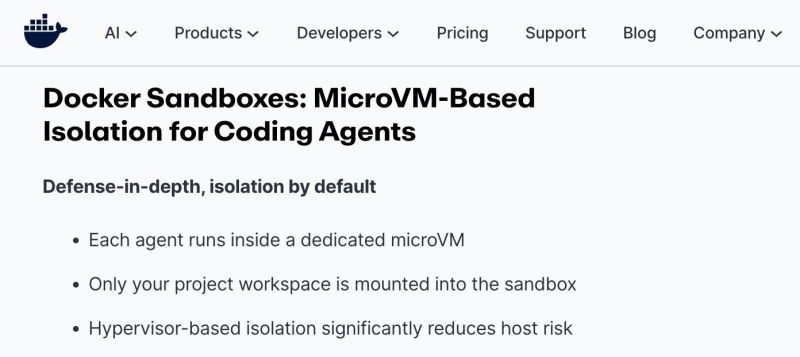

Docker Finally Admits Containers Don't Contain

Since a bunch of gen-ai companies started astro-turfing the concept of agentic coding into being, plenty of focus has been on the god awful state of sandboxing, in particular, around the so-called agents. Docker decided to jump on this bandwagon by throwing Moby under the bus and declaring that you must use their microvm based sandbox for safety.

They also jumped on another bandwagon - the 'zero cve image' train, which as we've shown in the past is a small step forward but doesn't really make any profound changes.

Not to be outdone - last year Apple finally announced their own container runtime which... wait for it... houses containers inside of vms.

Container Vulnerability Gems

We saw gems like CVE-2025-55205, which affects a project explicitly built to run multi-tenant workloads in k8s, which is a very very bad idea. If your company is doing this you should stop. It weighed in at an appropriate 9.1.

Some of the headlines last year were completely ridiculous such as nvidiascape that took a whopping 3 lines of code:

FROM nvidia/cuda:latest

ENV LD_PRELOAD=/tmp/malicious.so

COPY malicious.so /tmp/
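As I understand the public write-ups, those three lines work because a variable set by the image ends up in the environment of a helper that runs with host privileges. A minimal sketch of the general defence, dropping dynamic-loader variables before such a helper inherits them, could look like the following. `scrub_loader_env` is a hypothetical helper and this is not a description of the vendor's actual fix.

```python
def scrub_loader_env(image_env):
    """Return a copy of image_env without dynamic-loader (LD_*) variables."""
    return {
        name: value
        for name, value in image_env.items()
        if not name.startswith("LD_")
    }


if __name__ == "__main__":
    # Environment as declared by an untrusted image.
    image_env = {"LD_PRELOAD": "/tmp/malicious.so", "PATH": "/usr/bin"}
    print(scrub_loader_env(image_env))
```

The broader point is that anything an image can set has to be treated as attacker-controlled input by whatever privileged code reads it.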

There were so many problems with various software in the k8s world where the software was totally broken in exactly the area it was supposed to protect, such as CVE-2026-22039.

It is actually really hard to write a container runtime correctly - if you don't really know all the intricate details of what the various namespaces do and you don't understand the types of attacks that can happen you are basically entering a world of pain.

RBAC and network policies are typically "musts" in a k8s environment as standard namespace "isolation" in k8s isn't really isolation at all.

What Do We Do With Container Vulnerabilities?

Containers are an inherently broken security architecture.

The problem here is that even if you aren't running "multi-tenant" workloads - at a certain size in engineering you effectively are. If your engineering org is north of say 50 or 100 engineers you are effectively multi-tenant even if your company is the only user of the infrastructure. At that size containers simply are not a sound judgement call.

None of the security issues in this list are 'new' - these classes of bugs occur regularly in the container ecosystem. They happen all the time because containers are just fundamentally unsafe to be used in production environments. One thing that I'd like to point out, since the AI agent push has made everyone hop on the firecracker/microvm bandwagon: unikernels don't offer stronger isolation just because they are virtual machines. Firecracker itself offers no extra security compared to a normal vm running on ec2. Unikernels have many more architectural constraints, like the complete lack of users, of an interactive userland via a shell, and of the ability to run other programs on the same instance alongside the one that is currently running.

Even if you think your engineering org is not ready for unikernels, if you'd like to introduce unikernels to your team, reach out and we're happy to jump into the weeds with you.
